The ETSI NFV ISG (Industry Specification Group) has defined the NFV architectural framework for communications service provider (CSP) environments. The ETSI NFV architecture includes MANO (management and orchestration) components that provide VM lifecycle management capabilities. MANO comprises the NFV Orchestrator (NFVO), the VNF (virtual network function) Manager (VNFM) and the Virtualised Infrastructure Manager (VIM), and for fault and performance management it works alongside the traditional EMS (element management system). The VNF Manager is responsible for application virtualization layer events, the VIM is responsible for virtual infrastructure layer events, and the EMS monitors application performance.

As shown in Figure 1, ETSI MANO consists of several major management segments.

Figure 1

ETSI MANO Correlation Requirement

In the NFV domain, fault and performance management functions are distributed across the EMS, the VNF Manager and the VIM. The EMS collects application-related counters, the VNF Manager collects VNF service-related counters, and the VIM collects virtual and physical infrastructure counters.

To diagnose end-to-end performance issues such as the following, correlation among the VNF Manager, EMS and VIM is essential, as shown in Figure 2:

- Call drops per VM

- Application performance impact due to the failure of a particular CPU

- Utilization ratio of virtual CPU to physical CPU, etc.

Figure 2

Correlation Challenges

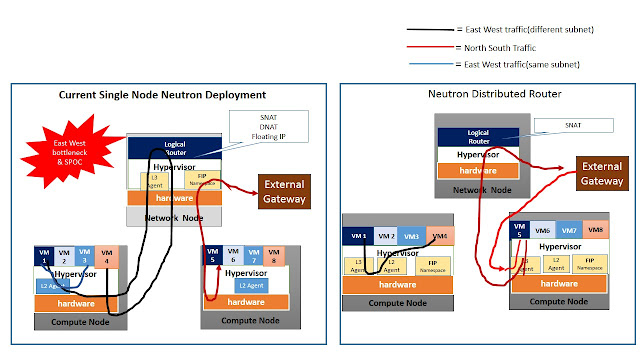

In a traditional telco network, the OSS/BSS platform captures data directly from the downstream EMS. Because the EMS is tightly coupled with the hardware, it has an end-to-end view of the underlying application and hardware.

In an NFV environment, the application layer, VNF layer and virtual infrastructure layer are based on different technologies and therefore have different monitoring systems, different measurement and analytics tools, and different ownership, as shown in Figure 3.

Figure 3

Global VM ID as Correlation Key

The challenge in correlating cloud performance data (VNFM and VIM) with telecom measurements (EMS) is finding common parameters that can serve as correlation keys.

The following two common attributes can be used for correlation across the NFV environment:

1) Event timestamp: the time at which the event occurred.

2) VM_ID (virtual machine ID): the virtual machine identifier, distributed in the VNFD (VNF descriptor).
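
To make the two keys concrete, here is a minimal Python sketch of joining application events (EMS) with infrastructure events (VIM) on VM_ID plus a timestamp window. The `Event` record, the `correlate` function and the 60-second default window are hypothetical illustrations, not part of any ETSI interface, and the sketch assumes all systems report timestamps from synchronized clocks.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    """A simplified fault/performance record; field names are hypothetical."""
    source: str          # reporting system, e.g. "EMS" or "VIM"
    vm_id: str           # deployment-wide unique VM_ID (correlation key 2)
    timestamp: datetime  # event occurrence time (correlation key 1)
    detail: str


def correlate(app_events, infra_events, window=timedelta(seconds=60)):
    """Return (application event, infrastructure event) pairs that share a
    VM_ID and whose timestamps differ by at most `window` (assumed value)."""
    # Index infrastructure events by VM_ID so each lookup is a dict access.
    by_vm = {}
    for ev in infra_events:
        by_vm.setdefault(ev.vm_id, []).append(ev)

    pairs = []
    for app_ev in app_events:
        for infra_ev in by_vm.get(app_ev.vm_id, []):
            # Same VM_ID and close enough in time: treat as related.
            if abs(app_ev.timestamp - infra_ev.timestamp) <= window:
                pairs.append((app_ev, infra_ev))
    return pairs
```

The VM_ID does the heavy lifting here: without a deployment-wide unique ID, the join would have to fall back on timestamps alone, which would pair unrelated events that merely happened at the same time.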

To use VM_ID as a correlation key, the VM_ID must be unique across the entire NFV deployment.

The CSP should enforce a policy of unique VM_IDs across the entire NFV deployment, including NFV orchestration systems, VNF on-boarding, EMS systems, the SDN controller, the VIM and all other tools and systems involved.

At the time of VM instantiation, the NFV orchestrator should obtain the VM_ID from global inventory management and distribute it to the NFV MANO elements and the downstream SDN controller as part of the VNFD (VNF descriptor).

As part of network policy, NFV MANO elements should be able to change the VM_ID in scenarios such as inter-host or intra-host live migration and VM evacuation. In this way, NFV elements use a unique VM_ID throughout VM lifecycle management.
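
The allocation and reassignment rules above can be sketched as a small inventory service. This is a hedged illustration only: `GlobalInventory`, its methods and the `node-linecard-sequence` ID format are hypothetical, chosen so that the ID reflects the VM location (as in the use case below) while a deployment-wide sequence number guarantees that no VM_ID is ever issued twice.

```python
import itertools


class GlobalInventory:
    """Hypothetical global inventory service that issues VM_IDs unique across
    the whole NFV deployment. IDs are never reissued, so an old VM_ID found in
    historical EMS or VIM records can never refer to a different VM."""

    def __init__(self):
        self._seq = itertools.count(1)  # deployment-wide, strictly increasing
        self._active = {}               # vm_id -> (node, line_card)

    def allocate(self, node, line_card):
        """Called by the NFV orchestrator at VM instantiation. The ID embeds
        the location; the sequence number keeps it unique even when the same
        location is reused."""
        vm_id = f"{node}-{line_card}-{next(self._seq):06d}"
        self._active[vm_id] = (node, line_card)
        return vm_id

    def reassign(self, old_vm_id, node, line_card):
        """Called on live migration or evacuation: retire the old VM_ID and
        issue a new one based on the new location."""
        if old_vm_id not in self._active:
            raise KeyError(f"unknown or retired VM_ID: {old_vm_id}")
        del self._active[old_vm_id]
        return self.allocate(node, line_card)

    def is_active(self, vm_id):
        return vm_id in self._active
```

For example, `allocate("node1", "lc2")` on a fresh inventory returns `"node1-lc2-000001"`, and migrating that VM with `reassign(..., "node7", "lc1")` returns `"node7-lc1-000002"`. Distributing the returned ID to the MANO elements and the SDN controller (for example inside the VNFD) is left out of the sketch.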

Figure 4 shows the VM_ID distribution. The user request can be a manual request from a dashboard or an API call from another system.

Figure 4

USE CASE: VM_ID-Based Fault Management Correlation

The following use case describes the need for correlation between the application EMS and the VIM to assess the impact of a physical CPU scheduler failure on VM application performance.

As shown in Figure 5:

- The application EMS sends call events to the Analytics Manager. Each report IE (information element) contains VM_ID=ABCD, a timestamp, Application ID=vMME, Release code=Drop, etc. The Analytics Manager calculates the KPI and finds that the hourly call drop rate for VM_ID ABCD exceeds the KPI threshold of 0.1%.

- The NFVI forwards virtualization layer and hardware-related alerts to the Analytics Manager.

- The correlation engine in the Analytics Manager correlates the EMS alerts with the NFVI alerts and finds that VM_ID ABCD is experiencing a physical CPU scheduler fault, which is causing the increased call drops.

- The Analytics Manager coordinates with the Policy Manager for resolution.

- The Policy Manager forwards a rule to migrate the VM with VM_ID ABCD to a new location.

- The Analytics Manager coordinates with Inventory Management to get hardware details for the new VM. These include the new VM location (node, line card and VM number) and the RAM, CPU and memory details described by the VM affinity rules in the VNFD. The new VM_ID is based on the new location.

- The Analytics Manager forwards the details to the NFVI manager.

- The NFVI manager instructs the hypervisor to spawn a new VM with VM_ID XYWZ.
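
The detection part of this use case (the first three steps) can be sketched in Python as follows. The tuple layouts, the function names and the one-hour bucketing are simplifying assumptions for illustration: call events stand in for the EMS report IEs, alerts stand in for the NFVI alerts, and the policy, inventory and hypervisor steps are not modeled.

```python
from collections import defaultdict

DROP_RATE_THRESHOLD = 0.001  # 0.1% per hour, the KPI threshold in the use case


def hour_bucket(ts):
    """Truncate a datetime to the start of its hour."""
    return ts.replace(minute=0, second=0, microsecond=0)


def hourly_drop_rates(call_events):
    """call_events: iterable of (vm_id, timestamp, release_code) tuples, a
    simplified stand-in for the EMS report IEs. Returns
    {(vm_id, hour): drop_rate}."""
    totals = defaultdict(int)
    drops = defaultdict(int)
    for vm_id, ts, release_code in call_events:
        key = (vm_id, hour_bucket(ts))
        totals[key] += 1
        if release_code == "Drop":
            drops[key] += 1
    return {key: drops[key] / totals[key] for key in totals}


def migration_candidates(call_events, infra_alerts):
    """infra_alerts: iterable of (vm_id, timestamp, alert_type) from the NFVI
    side. Returns VM_IDs whose hourly drop rate breaches the KPI and that also
    raised an infrastructure alert in the same hour."""
    alert_keys = {(vm_id, hour_bucket(ts)) for vm_id, ts, _ in infra_alerts}
    return sorted({
        vm_id
        for (vm_id, hour), rate in hourly_drop_rates(call_events).items()
        if rate > DROP_RATE_THRESHOLD and (vm_id, hour) in alert_keys
    })
```

For instance, 5 dropped calls out of 1,000 for VM_ID ABCD in one hour gives a 0.5% drop rate, above the 0.1% threshold; if the NFVI also reported a physical CPU scheduler fault for ABCD in that hour, ABCD is returned as a migration candidate. Fixed hour buckets are a deliberate simplification: a fault at 12:59 and drops at 13:01 would not be matched, so a real correlation engine would more likely use a sliding window.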

References

- Network Functions Virtualization (NFV); Infrastructure Overview (GS NFV-INF 001)

- Network Functions Virtualization (NFV); Architectural Framework (GS NFV 002)

- Network Functions Virtualization (NFV); Management and Orchestration (GS NFV-MAN 001)

- Network Functions Virtualization (NFV); Virtual Network Functions Architecture (GS NFV-SWA 001)

- ETSI specifications are available at http://www.etsi.org/technologies-clusters/technologies/nfv

This blog represents my personal understanding of the subject matter.